AI is no longer a distant workplace innovation — it is now embedded in hiring, performance management, monitoring, and decision-making. According to a McKinsey study (July 2024), 78% of organisations use AI in at least one business function, a figure set to rise as adoption accelerates.

For UK employers, this shift brings significant legal and compliance risks. The rapid rollout of AI into HR and operational functions intersects with UK data protection law and contractual liability, demanding proactive legal management.

The risks often overlap and can amplify each other. For example, discriminatory bias may also amount to unlawful automated decision-making (ADM) under the UK GDPR.

This article examines: (1) the principal legal and compliance risks when deploying AI in the workplace, and (2) practical mitigation measures employers should adopt now to reduce exposure to claims, regulatory action, and reputational damage.

Five key legal and compliance risks

Workplace AI tools raise five interlinked legal and compliance risks for UK employers:

- Data protection, transparency and explainability;

- Discrimination and bias;

- Automated decision-making (ADM);

- Employee monitoring and surveillance; and

- Liability uncertainty between employer and AI provider.

These risks are often interconnected. Addressing them in isolation risks leaving gaps in compliance, so they should be considered as part of a single, coherent AI governance strategy.

Data protection

AI tools used in the workplace may process large volumes of personal data, including performance metrics, communications and other HR records. Inappropriate or excessive collection, opaque AI processing methods, or processing data beyond the original purpose may all breach the UK GDPR principles of lawfulness, fairness, purpose limitation, and transparency. AI systems should be capable of providing transparency over their decision logic and ensuring explainability of outputs so that individuals can understand how decisions affecting them are made. Sharing personal data with an AI system or provider without appropriate technical or organisational security measures, and without contractual restrictions on onward disclosure or unauthorised secondary use, increases the risk of a data breach, with potential harm to employees and liability for the employer.

The data protection implications of AI are not confined to general HR systems: discrimination and bias, automated decision-making (ADM), and employee monitoring or surveillance each engage core UK GDPR requirements and must be assessed as part of the same compliance framework. Those specific risks are considered below.
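
By way of illustration only, the point about excessive collection and sharing with an external provider can be sketched in code. The Python example below assumes a hypothetical HR record, a hypothetical allow-list of fields (`ALLOWED_FIELDS`) and a secret key held by the employer; none of these names come from any particular product. It strips every field the stated purpose does not need and replaces the employee ID with a keyed hash before the record leaves the employer's systems. Pseudonymised data of this kind remains personal data under the UK GDPR, so this reduces exposure rather than removing the data from scope.

```python
import hashlib
import hmac

# Hypothetical allow-list: only the fields the stated purpose actually needs.
ALLOWED_FIELDS = {"role", "tenure_months", "performance_score"}


def pseudonymise_id(employee_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(secret_key, employee_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimise_record(record: dict, secret_key: bytes) -> dict:
    """Keep only allow-listed fields and swap the employee ID for a pseudonym."""
    minimised = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimised["subject_ref"] = pseudonymise_id(record["employee_id"], secret_key)
    return minimised


# Illustrative record; every value here is made up.
record = {
    "employee_id": "E1001",
    "name": "A. Example",
    "role": "analyst",
    "tenure_months": 18,
    "performance_score": 3.7,
    "sickness_notes": "free text that the tool does not need",
}

# The key stays with the employer, so the provider cannot reverse the pseudonym.
shared = minimise_record(record, secret_key=b"demo-key-held-by-employer")
```

Here `shared` contains only the three allow-listed fields plus `subject_ref`; the name and sickness notes never reach the provider.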

Automated decision-making (ADM)

Article 22 UK GDPR historically gave individuals the right not to be subject to decisions based solely on automated processing that produce legal or similarly significant effects. The Data (Use and Access) Act 2025 replaced Article 22 with Articles 22A–22D, allowing ADM under any Article 6 lawful basis provided the Article 22C safeguards are implemented. This flexibility does not extend to ADM involving special category personal data, which normally requires explicit consent. Consent is rarely valid in employment contexts.

Discrimination and bias

Generative AI (GenAI) tools may produce unfair or discriminatory outcomes due to algorithmic bias in training data. Where personal data is used, such bias can amount to unlawful processing under the UK GDPR, breaching the principles of fairness and accuracy, and potentially triggering obligations under Article 9 and Articles 22A–22D if special category data or ADM is involved.

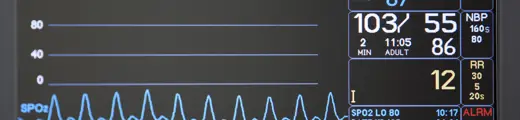

Employee monitoring and surveillance

AI-powered monitoring tools can track productivity, attendance, and even emotional wellbeing (e.g., sentiment analysis to assess burnout). Where these tools process personal data, unjustified or excessive monitoring may breach UK GDPR principles of lawfulness, fairness, transparency and data minimisation, and infringe the right to privacy under Article 8 ECHR, as incorporated by the Human Rights Act 1998. Lack of transparency can also breach Articles 13–15 UK GDPR.

Liability uncertainty

Responsibility for inaccurate, biased, or harmful AI outputs is often unclear between employer and provider. Personal data breaches, inadequate safeguards, or failure to honour data subject rights can lead to liability under UK GDPR. AI providers frequently include contractual disclaimers or broad liability caps to avoid responsibility for decision-logic errors, bias, or data-quality issues.

Practical mitigation measures

While the specific application will vary by sector and AI use case, a consistent set of measures can help employers reduce exposure to all five risk areas identified above. These include: (1) UK GDPR compliance controls, and (2) contractual and operational guard-rails.

UK GDPR compliance controls

Employers should consider the following steps:

- Conduct a DPIA assessing necessity, proportionality, risks, and safeguards; documenting the lawful basis under Article 6; and avoiding or strictly controlling the processing of special category data, even where an Article 9 condition exists. The DPIA should be carried out before any deployment of the AI tool and should also explicitly address bias detection, accuracy testing, and audits of decision logic and training data.

- Ensure transparency by updating employee privacy notices to explain where and how AI is used, the lawful basis for processing, potential effects, and safeguards; and by providing meaningful information about any ADM and the routes available for human review or appeal. Where relevant, give concrete examples (e.g., recruitment screening or attendance monitoring) and ensure explanations are clear and accessible.

- Implement ADM safeguards as required by Articles 22A–22D UK GDPR, as amended by the Data (Use and Access) Act 2025, by ensuring that, where decisions are solely automated, the conditions in Article 22B are met and the safeguards in Article 22C (meaningful human involvement, the right to contest an automated decision, and an explanation of the reasoning) are in effect; and by ensuring no ADM can be based on special category data unless a valid Article 9 condition applies.

- Apply robust data governance and security measures by implementing data minimisation and accuracy controls, role-based access restrictions, retention limits, logging, and technical/organisational measures proportionate to risk; ensuring that any international transfers are managed through the International Data Transfer Agreement (IDTA), or the EU SCCs together with the UK Addendum, where required; and applying contractual restrictions and robust safeguards when sharing personal data with any external AI provider.

- Maintain ongoing assurance processes by scheduling regular bias and accuracy testing, monitoring for model drift, and conducting periodic reviews; and by documenting these outcomes to demonstrate compliance to regulators if required. Where compliance gaps cannot be resolved, the AI tool should not be deployed.
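
As a rough illustration of what regular bias testing might involve in practice, the Python sketch below compares selection rates across groups for a hypothetical recruitment screening tool. The group labels, the sample data and the 0.8 threshold are all assumptions made for the example. The threshold echoes a rule of thumb used in US practice and is not a UK legal test; a ratio above it does not establish that a tool is lawful, and a ratio below it is a prompt for investigation rather than a finding of discrimination.

```python
from collections import Counter


def selection_rates(outcomes):
    """outcomes: iterable of (group, selected) pairs. Returns {group: rate}."""
    totals, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Ratio of each group's selection rate to the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected in any group, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """Groups whose ratio falls below the (illustrative) review threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up screening outcomes: group A, 5 of 10 selected; group B, 3 of 10.
outcomes = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(outcomes)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)
```

With this sample data, group B's selection rate is 0.3 against 0.5 for group A, giving a ratio of 0.6, so group B is flagged for review. Running the same check on each model version, and keeping the results, is one way to evidence the periodic testing and drift monitoring described above.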

Contractual and operational guard-rails

Employers should also implement these recommended measures in contracts with AI providers:

- Include or review data processing clauses that comply with Article 28 UK GDPR, define the parties’ roles (controller or processor) and processing instructions, prohibit unauthorised secondary use of data or training on employer-provided data without prior written approval, and, where permitted, ensure such use occurs only on properly anonymised datasets. For AI-based monitoring tools, the contract should also contain explicit prohibitions on unauthorised secondary use of monitoring data.

- Require warranties and transparency obligations confirming that training datasets have been lawfully sourced, that the AI model has been tested and meets agreed performance standards, and that the AI provider will disclose relevant model documentation (such as model cards), decision-logic summaries, and testing reports.

- Secure audit and oversight rights that allow the employer to conduct inspections, access documentation, and require cooperation on DPIAs or data subject rights requests; to approve or reject material model or version updates; and to suspend processing if safeguards fail.

- Mandate security and data breach terms requiring robust technical and organisational measures, effective controls over sub-processors, and clear breach-notification timelines that align with UK GDPR requirements.

- Allocate liability fairly by negotiating reasonable caps proportionate to the risk profile, securing indemnities where the provider’s fault causes a regulatory breach or third-party claim, and obligating the provider to provide costed cooperation in investigations and remedial actions.

- Establish operational controls such as approval gates before roll-out, maintaining decision logs for significant AI-driven outcomes, and requiring mandatory human review before finalising any decision that has legal or similarly significant effects on an individual.
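
The operational controls in the final point above, a decision log combined with mandatory human review, can be sketched as follows. This is a minimal Python illustration under stated assumptions: the class and field names (`Decision`, `DecisionLog`, `significant`, `subject_ref`) are hypothetical, and the log is held in memory rather than in an auditable store. It shows only the gating logic, in which a decision marked as having legal or similarly significant effects cannot be finalised until a named reviewer has been recorded.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


class HumanReviewRequired(Exception):
    """Raised when a significant decision has no recorded human reviewer."""


@dataclass
class Decision:
    subject_ref: str          # pseudonymous reference to the individual
    outcome: str              # the AI tool's proposed outcome
    model_version: str        # which model or version produced it
    significant: bool         # legal or similarly significant effect?
    reviewer: Optional[str] = None
    finalised_at: Optional[datetime] = None


@dataclass
class DecisionLog:
    entries: list = field(default_factory=list)

    def record_review(self, decision, reviewer, outcome=None):
        """Record who reviewed the decision and, if changed, the new outcome."""
        decision.reviewer = reviewer
        if outcome is not None:
            decision.outcome = outcome

    def finalise(self, decision):
        """Finalise and log a decision, enforcing the human-review gate."""
        if decision.significant and decision.reviewer is None:
            raise HumanReviewRequired(decision.subject_ref)
        decision.finalised_at = datetime.now(timezone.utc)
        self.entries.append(decision)
        return decision


log = DecisionLog()
draft = Decision("ref-001", "reject", "screening-v3", significant=True)

try:
    log.finalise(draft)       # no reviewer yet, so the gate blocks this
    blocked = False
except HumanReviewRequired:
    blocked = True

log.record_review(draft, reviewer="hr.manager", outcome="progress to interview")
final = log.finalise(draft)   # now permitted and written to the log
```

A gate of this kind only records that a review took place. Whether the human involvement is meaningful, rather than a rubber stamp of the tool's output, depends on the reviewer's authority, training and access to the underlying information, which code alone cannot guarantee.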

Comment

AI can significantly enhance efficiency and decision-making in the workplace, but without robust governance it poses material legal and compliance risks. By assessing potential impacts through a UK GDPR framework, including bias, automated decision-making, employee monitoring, data protection compliance, and liability allocation, and applying the practical and contractual measures outlined above, employers can harness AI’s benefits while reducing the likelihood of regulatory enforcement, litigation, and reputational damage.

Related item: The UK DUA Act’s Reform Pillars: Divergence from the EU GDPR
